Our company is actively exploring the possibilities of large language models (LLMs), such as ChatGPT created by OpenAI. Because we operate in most European countries, we are naturally interested in the multilingual capabilities these models offer.

Using LLMs in some languages is more expensive

It may not be a well-known fact, but the cost of OpenAI services is considerably higher for languages other than English. The reason is that OpenAI bills users by tokens (sub-word units), not by characters or words.

For English, the token count is very close to the number of words. For other languages, the count rises significantly, sometimes to more than double. For example, the Preamble to the Universal Declaration of Human Rights has 360 tokens in English but 724 tokens in Czech, even though the Czech text has far fewer words (248 in Czech versus 320 in English). The count rises even higher in languages that use non-Latin scripts.
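To make the comparison concrete, the figures quoted above can be turned into tokens-per-word ratios. The snippet below is only a small arithmetic sketch over those four numbers; the dictionary layout and the helper name `tokens_per_word` are our own choices for illustration.

```python
# Token and word counts for the Preamble, as quoted in the text above.
PREAMBLE = {
    "English": {"tokens": 360, "words": 320},
    "Czech": {"tokens": 724, "words": 248},
}


def tokens_per_word(tokens: int, words: int) -> float:
    """Average number of tokens needed to represent one word."""
    return tokens / words


for language, counts in PREAMBLE.items():
    ratio = tokens_per_word(counts["tokens"], counts["words"])
    print(f"{language}: {ratio:.2f} tokens per word")

# How many times more tokens the Czech version needs for the same content.
czech_vs_english = PREAMBLE["Czech"]["tokens"] / PREAMBLE["English"]["tokens"]
print(f"Czech needs {czech_vs_english:.2f}x the tokens of English")
```

English comes out at a little over one token per word, while Czech needs almost three, which is why the total roughly doubles even though the Czech text is shorter.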

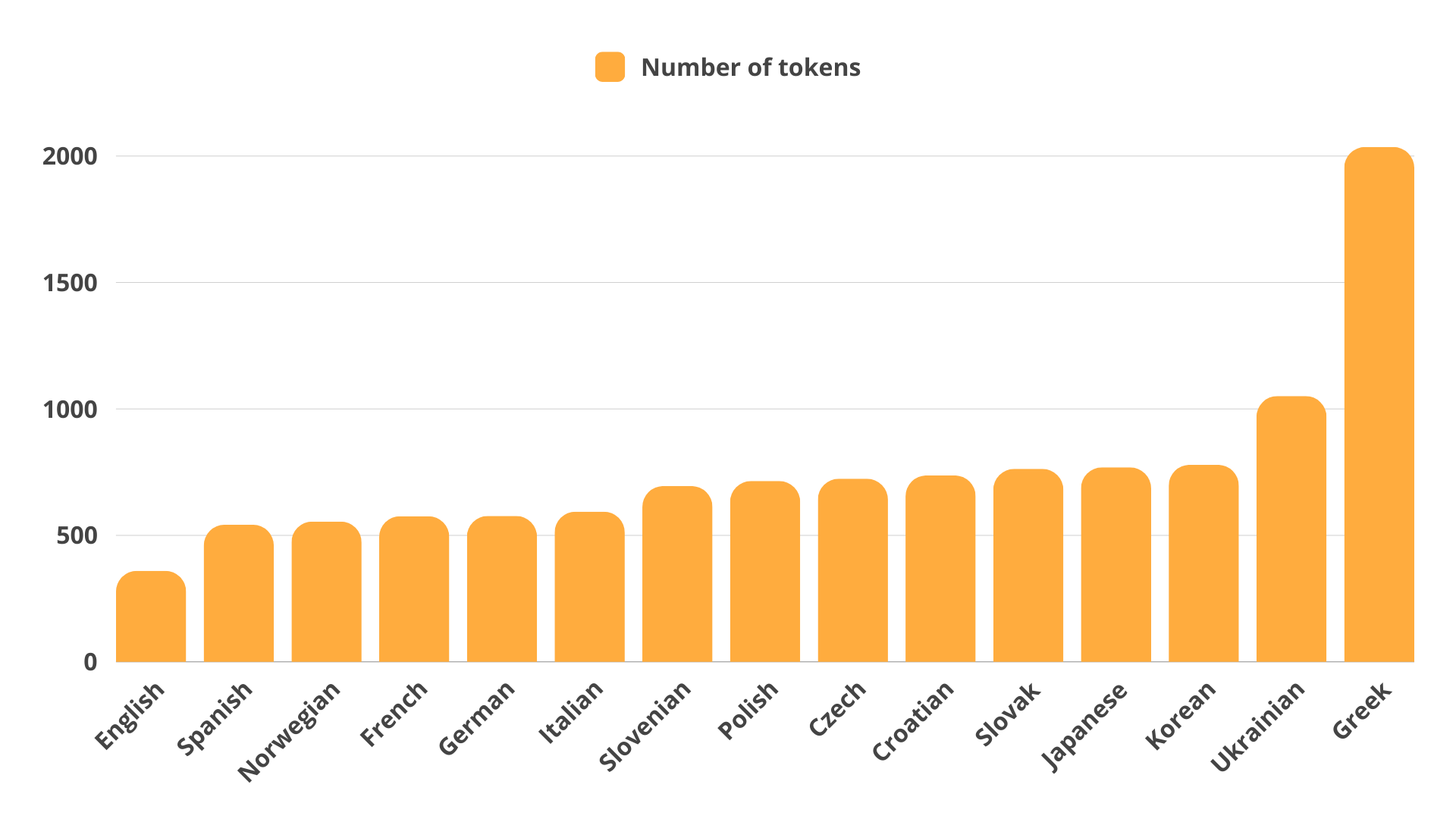

The graph shows the number of tokens for the same text in different languages.

What is the cause?

We initially thought the difference could be attributed to diacritics and/or character encoding. Czech, like other Slavic languages, uses many characters with diacritics that are not present in English (the most famous being ě, ř or ů). Curiously enough, this is not the whole picture. The main factor is how well the language is represented in the training data. The more words from a particular language are included in the training data, the fewer sub-word units the model needs to represent a particular word.

For example, the Czech words “baby” (slang for women) and “copy” (braids) are each tokenized as a single token, since they are homographs of English words.

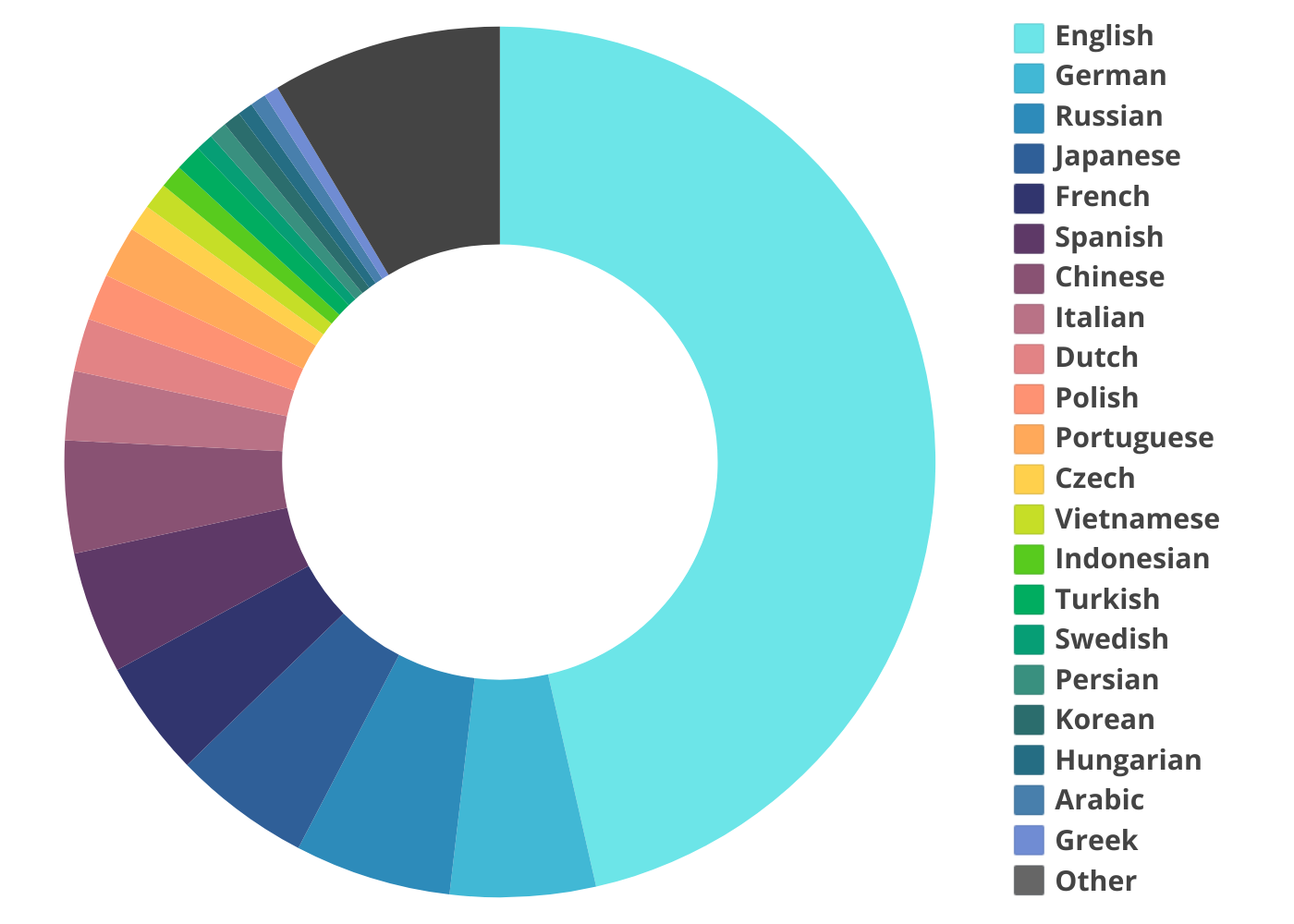

We do not know for certain how languages are represented in the training data of GPT models, since OpenAI does not disclose that information. However, we can form an idea based on the language distribution in the Common Crawl corpus (a database of text documents), which contains petabytes of data collected from the internet since 2008 and was used for training the GPT-3 model and almost certainly its successors as well.

As you can see, the training data is heavily English-centered, with only small portions of the corpus dedicated to other languages. The tokenization disproportion shown above is a clear consequence of this. When the corpus is processed, the text is reduced to a limited vocabulary of recurring units, greatest common divisors, we might say. Because a large part of the texts are in English, most tokens will correspond to English words or roots. Words in other languages need to be represented by much smaller tokens (sometimes even corresponding to individual characters).
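The effect can be reproduced on a toy scale. The sketch below trains a deliberately simplified, character-level byte-pair-encoding (BPE) tokenizer on a tiny, English-heavy word list. It is an illustration only: production tokenizers work on bytes and far larger corpora, and the corpus, the number of merges, and the function names here are all made up for the example.

```python
from collections import Counter


def apply_merge(symbols: tuple, pair: tuple) -> tuple:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def train_bpe(corpus: list, num_merges: int) -> list:
    """Learn merge rules by repeatedly merging the most frequent adjacent pair."""
    # Every word starts out split into single characters.
    words = Counter(tuple(word) for word in corpus)
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        updated = Counter()
        for symbols, freq in words.items():
            updated[apply_merge(symbols, best)] += freq
        words = updated
    return merges


def tokenize(word: str, merges: list) -> list:
    """Split a word into characters, then apply the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return list(symbols)


# Hypothetical toy corpus: English words dominate, Czech words are rare.
corpus = ["baby", "copy", "body"] * 20 + ["ženy", "copánky"]
merges = train_bpe(corpus, num_merges=9)

for word in ["baby", "copy", "ženy", "copánky"]:
    print(word, "->", tokenize(word, merges))
```

With this corpus, the frequent English spellings end up as single tokens, while the rare Czech words stay split into several pieces. "copánky" only benefits from the fragment it happens to share with "copy".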

Real-world consequences

As academic as it may sound, this fact has some very practical consequences for the price of using LLMs in our services. The price of an identical activity (such as summarizing or translating the Universal Declaration of Human Rights) would rise substantially depending on the language used: 1.5× in Spanish, 2× in Czech, 3× in Ukrainian and almost 6× in Greek. It would also lead to increased latency (the delay between sending the instruction and receiving the answer). Moreover, because both the context that can be provided to the models and the length of the output are restricted by the number of tokens, this effectively limits the quality of OpenAI services in non-English languages.
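The same multipliers also shrink the amount of content that fits into a fixed context window. The following sketch assumes a hypothetical 4,096-token limit and treats the multipliers quoted above as exact (Greek is rounded up to 6, since the text says "almost 6×"); the function name is ours.

```python
# Approximate token multipliers relative to English, as quoted in the text.
# Greek is rounded up to 6.0 here; the text says "almost 6x".
TOKEN_MULTIPLIER = {
    "English": 1.0,
    "Spanish": 1.5,
    "Czech": 2.0,
    "Ukrainian": 3.0,
    "Greek": 6.0,
}


def english_equivalent_context(context_tokens: int, language: str) -> int:
    """Tokens' worth of English-equivalent content that fits in the window."""
    return int(context_tokens / TOKEN_MULTIPLIER[language])


CONTEXT_TOKENS = 4096  # hypothetical limit, chosen only for illustration

for language, multiplier in TOKEN_MULTIPLIER.items():
    fits = english_equivalent_context(CONTEXT_TOKENS, language)
    print(f"{language}: cost x{multiplier}, room for ~{fits} English-equivalent tokens")
```

Under these assumptions, a Czech prompt can carry only about half the content of an English one within the same window, and a Greek prompt roughly a sixth.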

What can be done?

Researchers have recommended several courses of action to address this bias and make LLM services accessible and equal across languages (see, for example, the article by Petrov et al., 2023). Among other things, the authors suggest building and using multilingual corpora with diverse topics and proper names, as well as multilingually fair tokenizers.

How do we approach it?

The Beey app, our main product for transcribing speech to text, is not based on LLMs as such. Our AI speech recognition models are trained on data from all languages, and we are always gathering more to ensure high-quality transcription in all of them.

However, connecting Beey transcription with ChatGPT allows you to unlock your audio content. OpenAI services are included in some of the secondary features of Beey, notably summarization, which you can use to summarize parts of the transcribed text. Since Beey supports multiple languages, the summaries must be made available for both input and output languages. Our company aims to be language-inclusive and fair to all customers, so Beey does not differentiate prices by language, and all additional costs are covered by us.